Boosting

Boosting algorithms are a cornerstone of modern machine learning. This article explains how boosting works, how it developed, which algorithms are most widely used, and where they are applied in practice. It combines technical detail with practical examples and is aimed at both newcomers and experienced practitioners.

Understanding the Basics of Boosting

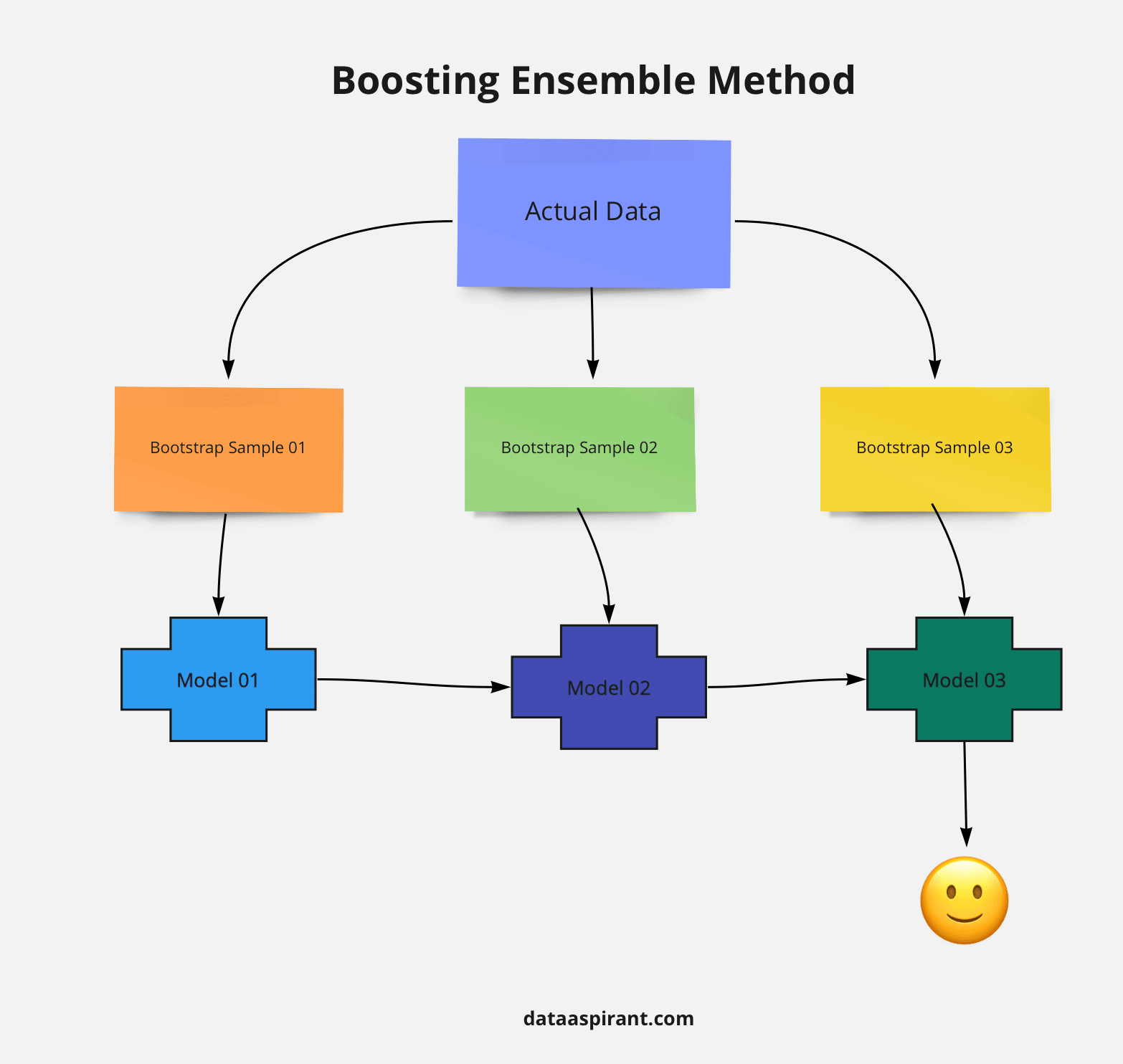

Boosting is an ensemble learning technique that improves predictive performance by combining multiple weak learners into a single strong learner. A weak learner is a model that performs only somewhat better than random guessing. The idea behind boosting is that a set of simple models, when combined effectively, can often outperform a single model, even one that is more complex.

The fundamental principle of boosting involves training a sequence of models, where each subsequent model aims to correct the mistakes made by its predecessors. This iterative process of learning and refinement results in a robust and accurate predictive model. The key to boosting's success lies in its ability to focus on challenging examples, giving them higher priority in the learning process, thus improving the overall generalization performance of the model.
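
In symbols, the sequential scheme described above can be written as an additive model. The notation is introduced here for illustration and is not from the original text: h_m is the m-th weak learner, alpha_m is its weight in the ensemble, and F_m is the ensemble after m rounds.

```latex
F_m(x) = F_{m-1}(x) + \alpha_m h_m(x),
\qquad
F_M(x) = \sum_{m=1}^{M} \alpha_m h_m(x)
```

Each round adds one term chosen to reduce the errors of F_{m-1}. How h_m and alpha_m are chosen is what distinguishes one boosting algorithm from another.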

Historical Evolution of Boosting

The modern history of boosting began with AdaBoost (Adaptive Boosting), introduced in 1995 by Yoav Freund and Robert Schapire. AdaBoost set the stage for the boosting techniques that followed. It is not tied to one model type and can wrap any weak learner, although decision stumps and other shallow decision trees became the usual choice, and it proved highly successful on binary classification tasks.

Over time, the field of boosting has evolved significantly. Researchers and practitioners have explored and developed numerous variations of boosting algorithms, each with its unique strengths and applications. Some notable advancements include Gradient Boosting, which utilizes gradient descent to minimize a loss function, and XGBoost, an optimized distributed gradient boosting library that has gained immense popularity for its efficiency and scalability.

Key Components of Boosting Algorithms

At their core, boosting algorithms have three parts: a base learner, the weak learners it produces, and the boosting process that combines them. The base learner is the model family fitted in every round, typically a decision tree of limited depth. Each fitted model is a weak learner: a simple model, often a decision stump (a decision tree with a single split), that may not perform well on its own but can be combined with others to form a strong learner.

The boosting process is a series of iterative steps. In each iteration, the algorithm concentrates on what the current ensemble gets wrong. In AdaBoost this means giving higher weights to the instances misclassified by the previous model; in gradient boosting it means fitting the next model to the remaining errors. The process continues until a predefined stopping criterion is met, such as reaching a specified number of iterations or achieving a desired level of performance on held-out data.
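
A single round of the reweighting step can be worked through by hand. The sketch below uses the standard AdaBoost update for binary classification; the function name `reweight` and the five-instance example are made up for illustration, and the code assumes the weighted error is strictly between 0 and 1.

```python
import math

def reweight(weights, misclassified):
    """One AdaBoost-style round: return (alpha, new_weights).

    weights       -- current instance weights, summing to 1
    misclassified -- booleans, True where the current weak learner was wrong
    """
    # Weighted error of the current weak learner.
    error = sum(w for w, wrong in zip(weights, misclassified) if wrong)
    # The learner's vote: larger when its weighted error is smaller.
    alpha = 0.5 * math.log((1.0 - error) / error)
    # Up-weight mistakes, down-weight correct predictions, then renormalize.
    raw = [w * math.exp(alpha if wrong else -alpha)
           for w, wrong in zip(weights, misclassified)]
    total = sum(raw)
    return alpha, [r / total for r in raw]

weights = [0.2] * 5                                  # start uniform
misclassified = [False, False, True, False, False]   # one mistake out of five
alpha, new_weights = reweight(weights, misclassified)
print(round(alpha, 3), [round(w, 3) for w in new_weights])
# 0.693 [0.125, 0.125, 0.5, 0.125, 0.125]
```

After one round the single misclassified instance carries half of the total weight, so the next weak learner is strongly encouraged to get it right.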

Techniques in Boosting

Boosting encompasses a wide array of techniques, each with its unique approach to creating strong learners from weak ones. Let’s explore some of the most prominent boosting algorithms and their characteristics.

AdaBoost (Adaptive Boosting)

AdaBoost, as mentioned earlier, was the pioneering boosting algorithm. It maintains a weight for every training instance and updates those weights after each round: instances that the latest model misclassified receive higher weights, forcing subsequent models to focus on these challenging examples. Each model also receives a vote in the final prediction that grows with its accuracy. AdaBoost is known for its simplicity and effectiveness, especially in binary classification tasks.
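
To make the full loop concrete, here is a minimal from-scratch sketch of AdaBoost with decision stumps on one-dimensional data. It is a teaching sketch under simplifying assumptions (labels are +1 or -1, a single numeric feature, exhaustive stump search), not a reference implementation, and all function names are hypothetical.

```python
import math

def stump_predict(x, threshold, polarity):
    """Predict `polarity` when x > threshold, otherwise `-polarity`."""
    return polarity if x > threshold else -polarity

def fit_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(x, threshold, polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost_fit(xs, ys, n_rounds=10):
    """Train an ensemble of (alpha, threshold, polarity) stumps."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(n_rounds):
        error, threshold, polarity = fit_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)    # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's vote
        ensemble.append((alpha, threshold, polarity))
        # Misclassified instances (y * prediction == -1) gain weight.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data that no single stump can separate.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
for rounds in (1, 3):
    model = adaboost_fit(xs, ys, n_rounds=rounds)
    accuracy = sum(adaboost_predict(model, x) == y for x, y in zip(xs, ys)) / len(xs)
    print(rounds, accuracy)
# 1 0.7
# 3 1.0
```

One stump classifies 7 of the 10 toy points correctly, while three reweighted stumps voting together classify all of them, which is the weak-to-strong effect in miniature.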

Gradient Boosting

Gradient Boosting generalizes the idea by treating boosting as gradient descent on a loss function, carried out on the ensemble's predictions rather than on a fixed set of model parameters. Where AdaBoost reweights instances, Gradient Boosting fits each new learner to the negative gradient of the loss with respect to the current predictions (for squared error, these are simply the residuals) and adds a scaled copy of that learner to the ensemble. Because any differentiable loss function can be used, Gradient Boosting is highly versatile and applies to many types of problems, including regression and classification tasks.
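
The following is a minimal sketch of gradient boosting for regression with squared-error loss, where the negative gradient is just the residual. It assumes a single numeric feature with at least two distinct values and uses regression stumps as weak learners; the function names and the `learning_rate` default are choices made for this example.

```python
def fit_regression_stump(xs, targets):
    """Least-squares stump: return (threshold, left_value, right_value)."""
    best = None
    for threshold in sorted(set(xs))[:-1]:   # skip the max so both sides are non-empty
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]

def gradient_boost_fit(xs, ys, n_rounds=50, learning_rate=0.1):
    """Return (base_prediction, learning_rate, list_of_stumps)."""
    base = sum(ys) / len(ys)                 # start from the mean
    predictions = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # For the loss (y - F)^2 / 2, the negative gradient w.r.t. F is y - F.
        residuals = [y - p for y, p in zip(ys, predictions)]
        threshold, left_value, right_value = fit_regression_stump(xs, residuals)
        stumps.append((threshold, left_value, right_value))
        # Take a small step in the direction the stump suggests.
        predictions = [p + learning_rate * (left_value if x <= threshold else right_value)
                       for x, p in zip(xs, predictions)]
    return base, learning_rate, stumps

def gradient_boost_predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(
        (left if x <= threshold else right) for threshold, left, right in stumps)

def training_mse(model, xs, ys):
    return sum((y - gradient_boost_predict(model, x)) ** 2
               for x, y in zip(xs, ys)) / len(xs)

xs = list(range(10))
ys = [float(x * x) for x in xs]
for rounds in (0, 5, 50):
    model = gradient_boost_fit(xs, ys, n_rounds=rounds)
    print(rounds, round(training_mse(model, xs, ys), 2))
```

The printed training error shrinks as rounds are added. Swapping in a different loss only changes the line that computes `residuals`, which is why the method adapts so easily to other tasks.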

XGBoost (Extreme Gradient Boosting)

XGBoost is an optimized implementation of Gradient Boosting, designed for efficiency and scalability. It has become a go-to choice for many practitioners because of its strong performance and its ability to handle large datasets. XGBoost adds several enhancements, including regularization terms in the training objective and parallelized tree construction, which often make it faster than a plain Gradient Boosting implementation and less prone to overfitting.
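
The effect of regularization can be seen in the leaf-weight and split-gain formulas from the XGBoost paper. The sketch below implements those formulas directly; it does not call the XGBoost library, the function names are hypothetical, and `reg_lambda` (L2 penalty on leaf weights) and `gamma` (penalty per added leaf) are simply the names chosen here for the two regularization constants.

```python
def leaf_weight(grad_sum, hess_sum, reg_lambda=1.0):
    """Optimal leaf value given summed gradients and Hessians in the leaf."""
    return -grad_sum / (hess_sum + reg_lambda)

def leaf_score(grad_sum, hess_sum, reg_lambda=1.0):
    """Loss reduction achievable by a leaf (higher is better)."""
    return grad_sum ** 2 / (hess_sum + reg_lambda)

def split_gain(g_left, h_left, g_right, h_right, reg_lambda=1.0, gamma=0.0):
    """Gain from splitting a node into two children, minus the leaf penalty."""
    parent = leaf_score(g_left + g_right, h_left + h_right, reg_lambda)
    children = (leaf_score(g_left, h_left, reg_lambda)
                + leaf_score(g_right, h_right, reg_lambda))
    return 0.5 * (children - parent) - gamma

# Squared-error example: gradient = prediction - target, Hessian = 1 per row.
# Two rows fall on each side of the candidate split.
print(leaf_weight(-4.0, 2.0, reg_lambda=0.0))                    # unregularized leaf value
print(leaf_weight(-4.0, 2.0, reg_lambda=1.0))                    # shrunk toward zero
print(split_gain(-4.0, 2.0, 4.0, 2.0, reg_lambda=1.0))           # positive: split helps
print(split_gain(-4.0, 2.0, 4.0, 2.0, reg_lambda=1.0, gamma=6.0))  # negative: split rejected
```

A larger `reg_lambda` shrinks every leaf value toward zero, and a larger `gamma` makes the algorithm refuse splits whose benefit is too small, which is how the regularized objective keeps trees simpler.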

LightGBM (Light Gradient Boosting Machine)

LightGBM is another popular Gradient Boosting framework that focuses on efficiency and speed. Among its techniques is Gradient-based One-Side Sampling (GOSS), which keeps the training instances with the largest gradients and randomly samples from the rest, reducing the computational cost of each round. LightGBM is particularly useful for large-scale datasets and has gained popularity for its fast training and inference times.
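
The sampling idea behind GOSS can be sketched in a few lines, following the description in the LightGBM paper rather than the library's source code. The function name `goss_sample` and the parameter names `top_rate` and `other_rate` (the kept fraction of large-gradient instances and the sampled fraction of the rest) are choices made for this example.

```python
import random

def goss_sample(gradients, top_rate=0.2, other_rate=0.1, seed=0):
    """Return (indices, weights) for one round of one-side sampling.

    Instances with the largest |gradient| are always kept with weight 1.
    A random subset of the remaining instances is kept and up-weighted so
    that the sampled data still roughly represents the full dataset.
    """
    n = len(gradients)
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    n_top = round(top_rate * n)
    n_other = round(other_rate * n)
    top, rest = order[:n_top], order[n_top:]
    rng = random.Random(seed)
    sampled = rng.sample(rest, min(n_other, len(rest)))
    amplify = (1.0 - top_rate) / other_rate   # compensates for the dropped instances
    indices = top + sampled
    weights = [1.0] * len(top) + [amplify] * len(sampled)
    return indices, weights

# 100 instances whose gradient magnitude grows with the index.
gradients = [((-1) ** i) * i / 100 for i in range(100)]
indices, weights = goss_sample(gradients, top_rate=0.2, other_rate=0.1)
print(len(indices), sum(weights))
```

Only 30 of the 100 instances are used in the round, yet their weights add up to roughly 100, so gradient statistics computed on the sample stay on the same scale as those of the full dataset.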

CatBoost (Categorical Boosting)

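CatBoost is a boosting algorithm designed to handle categorical data effectively. It encodes each categorical value using target statistics computed only from examples that come earlier in a random ordering of the data, and it applies the same ordering principle to the boosting steps themselves (ordered boosting), so that a prediction for an example never depends on that example's own label. CatBoost has shown impressive performance in tasks involving high-cardinality categorical data, making it a valuable tool for practitioners working with such datasets.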
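
One ingredient of CatBoost's treatment of categorical features, as described in the CatBoost paper, is the ordered target statistic: each row's category is replaced by a smoothed average of the targets of earlier rows with the same category. The sketch below is a simplified illustration, not CatBoost's implementation; it treats the given row order as the random permutation, and the names `ordered_target_stats`, `prior`, and `prior_weight` are hypothetical.

```python
def ordered_target_stats(categories, targets, prior, prior_weight=1.0):
    """Encode each category using only the targets of earlier rows."""
    sums, counts = {}, {}
    encoded = []
    for category, target in zip(categories, targets):
        seen_sum = sums.get(category, 0.0)
        seen_count = counts.get(category, 0)
        # Smoothed mean of previously seen targets; falls back to the prior
        # when the category has not appeared yet.
        encoded.append((seen_sum + prior_weight * prior) / (seen_count + prior_weight))
        # Only now does the current row's target become visible to later rows.
        sums[category] = seen_sum + target
        counts[category] = seen_count + 1
    return encoded

categories = ["a", "b", "a", "a", "b"]
targets = [1, 0, 1, 0, 1]
prior = sum(targets) / len(targets)   # 0.6, the overall target mean
encoded = ordered_target_stats(categories, targets, prior)
print([round(value, 3) for value in encoded])
# [0.6, 0.6, 0.8, 0.867, 0.3]
```

Because a row's own target never enters its encoding, the encoded feature cannot leak the label, which is the problem a plain per-category target mean would have.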

Applications of Boosting in Real-World Scenarios

Boosting algorithms have found extensive applications across various industries and domains. Their ability to handle complex datasets and improve predictive performance has made them indispensable tools for data scientists and machine learning practitioners.

Fraud Detection

In the financial industry, boosting algorithms play a crucial role in fraud detection systems. By training models on historical transaction data, boosting techniques can identify patterns and anomalies that may indicate fraudulent activities. Because boosted models train quickly on tabular data, they can be retrained regularly on recent transactions, which helps them keep up with new and evolving fraud schemes.

Image Classification

Boosting algorithms have proven their worth in the field of computer vision, particularly in image classification and detection tasks. A well-known example is the Viola-Jones face detector, which uses AdaBoost to select and combine simple image features. More generally, boosted decision trees can classify images from features extracted beforehand. This has significant implications in areas like medical imaging, where accurate classification of medical scans can lead to improved diagnosis and treatment planning.

Natural Language Processing

In the realm of natural language processing (NLP), boosting algorithms have been employed for tasks such as sentiment analysis, named entity recognition, and text classification. By treating each word or phrase as a feature, boosting models can learn complex patterns in text data, enabling more accurate predictions. This has applications in areas like customer feedback analysis, social media monitoring, and content categorization.

Healthcare Diagnostics

Boosting algorithms have shown promise in healthcare diagnostics, particularly in disease prediction and patient monitoring. By analyzing large datasets of patient records, boosting models can identify patterns and risk factors associated with various diseases. This can support earlier detection and more personalized treatment plans.

Performance Analysis and Comparison

When choosing a boosting algorithm for a specific task, it’s essential to consider various factors, including the nature of the dataset, the complexity of the problem, and the computational resources available. Let’s analyze the performance of some popular boosting algorithms and discuss their strengths and weaknesses.

| Boosting Algorithm | Advantages | Disadvantages |
|---|---|---|
| AdaBoost | Simple and effective for binary classification. Few hyperparameters to tune. | Sensitive to noisy labels and outliers, because misclassified instances keep gaining weight. May not perform well with high-dimensional data. |
| Gradient Boosting | Highly versatile. Can handle various types of problems, including regression and multi-class classification. Works with any differentiable loss function. | Requires careful hyperparameter tuning. Prone to overfitting if not regularized properly. Trees are built sequentially, so training can be slow. |
| XGBoost | Fast and accurate in practice. Handles large datasets efficiently. Includes regularization techniques for better generalization. | Has many hyperparameters to tune. May require more computational resources compared to simpler boosting algorithms. |
| LightGBM | Very fast training and inference times. Efficient for large-scale datasets. Employs the GOSS technique to reduce the data processed per round. | Can overfit small datasets unless tree complexity is constrained. May require additional tuning for optimal performance. |
| CatBoost | Specialized for handling categorical data. Effective in tasks involving high-cardinality features. Ordered boosting technique reduces overfitting. | Offers less of an advantage when the data has few or no categorical features. Ordered boosting adds training cost. |

Future Implications and Research Directions

The field of boosting algorithms continues to evolve, with ongoing research exploring new techniques and applications. Here are some exciting future directions and potential advancements:

- Development of more efficient and scalable boosting algorithms, capable of handling larger and more complex datasets.

- Exploration of hybrid boosting techniques that combine the strengths of different algorithms to further enhance performance.

- Application of boosting in novel domains, such as reinforcement learning and deep learning, to improve the performance of these models.

- Research into automated hyperparameter tuning and model selection techniques for boosting algorithms, making them more accessible to practitioners.

- Investigation of boosting's potential in explainable AI, helping to interpret and understand the decisions made by complex ensemble models.

Conclusion

Boosting algorithms have emerged as a powerful tool in the machine learning arsenal, offering a robust approach to improving predictive models. Through this comprehensive guide, we have explored the fundamentals, techniques, and real-world applications of boosting. From its humble beginnings with AdaBoost to the cutting-edge advancements like XGBoost and LightGBM, boosting continues to push the boundaries of what’s possible in machine learning.

As the field evolves, boosting algorithms will undoubtedly play a pivotal role in shaping the future of artificial intelligence and its impact on various industries. We hope this article has provided valuable insights and knowledge, empowering readers to leverage the power of boosting in their own machine learning endeavors.

How does boosting differ from other ensemble learning techniques like bagging?

Boosting and bagging are both ensemble learning techniques, but they differ in their approach. Bagging, or bootstrap aggregating, trains multiple models independently on different bootstrap samples of the data and then averages or votes over their predictions, which mainly reduces variance. In contrast, boosting trains models sequentially, with each model learning from the mistakes of its predecessors, which mainly reduces bias. This often makes boosting more accurate than bagging on clean data, although it also makes boosting more sensitive to noisy labels.

What are some common challenges in implementing boosting algorithms, and how can they be addressed?

One common challenge is overfitting, where the model becomes too specialized to the training data and performs poorly on new, unseen data. To address this, regularization techniques, such as early stopping, a smaller learning rate, or adding a regularization term to the loss function, can be employed. Additionally, careful hyperparameter tuning and cross-validation can help prevent overfitting and improve the generalization performance of boosting models.
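
Early stopping is simple to express in code. The sketch below assumes a sequence of validation losses, one per boosting round, is available; the function name and the `patience` parameter (how many rounds without improvement to tolerate) are choices made for this example, and the loss values are invented.

```python
def best_round_with_early_stopping(validation_losses, patience=3):
    """Return (best_round, best_loss), stopping once no improvement
    has been seen for `patience` consecutive rounds."""
    best_round, best_loss = 0, float("inf")
    for round_index, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_round, best_loss = round_index, loss
        elif round_index - best_round >= patience:
            break   # validation loss has stalled; stop adding learners
    return best_round, best_loss

# Hypothetical validation losses, one per boosting round.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.51, 0.49]
print(best_round_with_early_stopping(losses, patience=3))
# (3, 0.5)
```

Training stops at round 6, three rounds after the best result at round 3, and the ensemble is truncated to the best round. The later, slightly lower loss at round 7 is never reached, which shows the trade-off that the `patience` setting controls.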

Are there any scenarios where boosting algorithms may not be the best choice, and what alternatives can be considered?

Boosting algorithms may not be the best choice for very small datasets or for data with many mislabeled examples, because repeatedly fitting the remaining errors tends to fit noise. In such cases, other ensemble methods like bagging or random forests might be more suitable. Highly imbalanced data also needs care, for example class weighting or resampling. Additionally, for problems with a large number of features or high-dimensional data, simpler models or feature selection techniques might be preferred to avoid overfitting.