Computer Parallelism

Computer parallelism has emerged as a powerful approach to improving computational efficiency and performance. As modern applications demand ever more processing power, exploiting parallelism has become essential for getting the most out of today's hardware. This article examines the fundamental principles of computer parallelism, its main techniques, and its impact on modern computing.

Understanding the Principles of Computer Parallelism

At its core, computer parallelism means executing multiple computational tasks simultaneously. This contrasts with traditional sequential execution, in which tasks are processed one after another. When a problem can be divided into parts that run independently, parallelism can significantly accelerate computation, improve resource utilization, and make large problems tractable.

In practice, parallelism depends on hardware that can carry out many operations at once. With the spread of multi-core processors and distributed computing systems, computers have become able to handle parallel workloads routinely. These advances have changed the way we approach computational tasks, making it possible to tackle problems that were once computationally infeasible.

In the realm of computer parallelism, there are two primary forms: task parallelism and data parallelism. Task parallelism breaks a computation into smaller, independent subtasks that can be executed concurrently; each subtask can run on a separate processor core or thread. Data parallelism, by contrast, distributes the data across multiple processing units, each of which applies the same operation to its own portion.
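The two forms can be contrasted in a short Python sketch using the standard library's `concurrent.futures`. The function names (`task_parallel`, `data_parallel`, `square_chunk`) are hypothetical, chosen for illustration. A thread pool keeps the example simple, but in CPython the global interpreter lock (GIL) stops threads from executing Python bytecode in parallel, so for CPU-bound pure-Python work a `ProcessPoolExecutor` would be the more usual choice; the structure of the code stays the same.

```python
from concurrent.futures import ThreadPoolExecutor


def square_chunk(chunk):
    # Data parallelism: the same operation applied to one slice of the data.
    return [x * x for x in chunk]


def task_parallel(values):
    # Task parallelism: two *different* subtasks run concurrently.
    with ThreadPoolExecutor(max_workers=2) as pool:
        f_total = pool.submit(sum, values)
        f_max = pool.submit(max, values)
        return f_total.result(), f_max.result()


def data_parallel(values, workers=4):
    # Split the data into roughly equal chunks (ceiling division, at least 1).
    size = max(1, (len(values) + workers - 1) // workers)
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so the pieces reassemble correctly.
        results = list(pool.map(square_chunk, chunks))
    return [y for part in results for y in part]


data = list(range(10))
print(task_parallel(data))   # (45, 9)
print(data_parallel(data))   # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```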

Unraveling the Techniques of Computer Parallelism

The techniques used in computer parallelism are diverse and suited to different computational scenarios. Here we explore some of the key approaches:

Multithreading and Threading Models

Multithreading is a fundamental technique in computer parallelism, allowing multiple threads of execution to run concurrently. Each thread is an independent path of execution within the same process, and all threads share that process's memory. Threading APIs, such as POSIX Threads (pthreads) and Java's Thread class, provide the abstractions needed to create, manage, and synchronize multiple threads.

By leveraging multithreading, developers can use parallel processing to improve the performance of their applications. For instance, consider a scientific simulation involving complex mathematical computations. By dividing the work among multiple threads, each responsible for a specific subset of the calculations, the overall execution time can be significantly reduced, provided those calculations are largely independent and enough cores are available.
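The following Python sketch shows the pattern described above: the index range of a hypothetical computation (a sum of squares standing in for the simulation's calculations) is split into one subrange per thread, each thread computes a private partial result, and a lock protects the single shared update. It uses the standard `threading` module, whose `Thread`, `Lock`, `start()`, and `join()` correspond closely to their pthreads and Java counterparts. Because of CPython's global interpreter lock, this code illustrates the structure rather than delivering a speedup; the same structure in C with pthreads or in Java can run on multiple cores.

```python
import threading


def parallel_sum_of_squares(n, num_threads=4):
    """Sum i*i for i in range(n), splitting the index range across threads."""
    total = 0
    lock = threading.Lock()

    def worker(start, stop):
        nonlocal total
        # Private partial result: no other thread touches it, so no lock needed.
        partial = sum(i * i for i in range(start, stop))
        # The read-modify-write on the shared total must be atomic; without
        # the lock, two threads could read the same old value and lose an update.
        with lock:
            total += partial

    # Divide [0, n) into num_threads contiguous subranges.
    bounds = [n * k // num_threads for k in range(num_threads + 1)]
    threads = [threading.Thread(target=worker, args=(bounds[k], bounds[k + 1]))
               for k in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread before reading the result
    return total


print(parallel_sum_of_squares(1000))  # 332833500
```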

Parallel Programming Models

Parallel programming models offer high-level abstractions for expressing parallel computations. Models such as OpenMP and MPI (Message Passing Interface) are provided through compiler support and libraries that simplify the development of parallel applications. They hide many of the low-level details of parallel execution, allowing developers to focus on the parallel algorithm itself.

OpenMP, for example, provides a set of compiler directives and library routines that enable shared-memory parallelism. It allows developers to annotate their code with parallel constructs, such as parallel loops and sections, to indicate where parallelism should be applied. MPI, on the other hand, is widely used in distributed computing environments, enabling communication and coordination between processes running on different nodes.
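MPI itself is a library standard used from C, C++, or Fortran (Python bindings such as mpi4py exist but are not used here). As a rough, self-contained model of its message-passing style, the sketch below simulates MPI ranks with Python threads and gives each rank a `queue.Queue` mailbox; this is an assumption made for illustration, not how an MPI implementation works. Rank 0 scatters slices of the data, the other ranks send back partial sums, and rank 0 combines them, which is the pattern that MPI's collective operations `MPI_Scatter` and `MPI_Reduce` implement directly. The function name `message_passing_sum` is hypothetical.

```python
import queue
import threading


def message_passing_sum(data, num_ranks=4):
    """Model of MPI-style scatter/compute/reduce (assumes num_ranks >= 1).

    Ranks share no variables; all coordination happens through explicit
    messages placed in per-rank mailboxes.
    """
    inboxes = [queue.Queue() for _ in range(num_ranks)]  # one mailbox per rank

    def worker(rank):
        chunk = inboxes[rank].get()          # "receive" a slice from rank 0
        inboxes[0].put((rank, sum(chunk)))   # "send" the partial sum to rank 0

    workers = [threading.Thread(target=worker, args=(r,)) for r in range(1, num_ranks)]
    for t in workers:
        t.start()

    # Scatter: rank 0 keeps the first slice and sends one slice to each worker.
    n = len(data)
    bounds = [n * k // num_ranks for k in range(num_ranks + 1)]
    for r in range(1, num_ranks):
        inboxes[r].put(data[bounds[r]:bounds[r + 1]])
    result = sum(data[bounds[0]:bounds[1]])  # rank 0's own share

    # Reduce: collect exactly one partial sum from each worker rank.
    for _ in range(1, num_ranks):
        _rank, partial = inboxes[0].get()
        result += partial

    for t in workers:
        t.join()
    return result


print(message_passing_sum(list(range(100))))  # 4950
```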

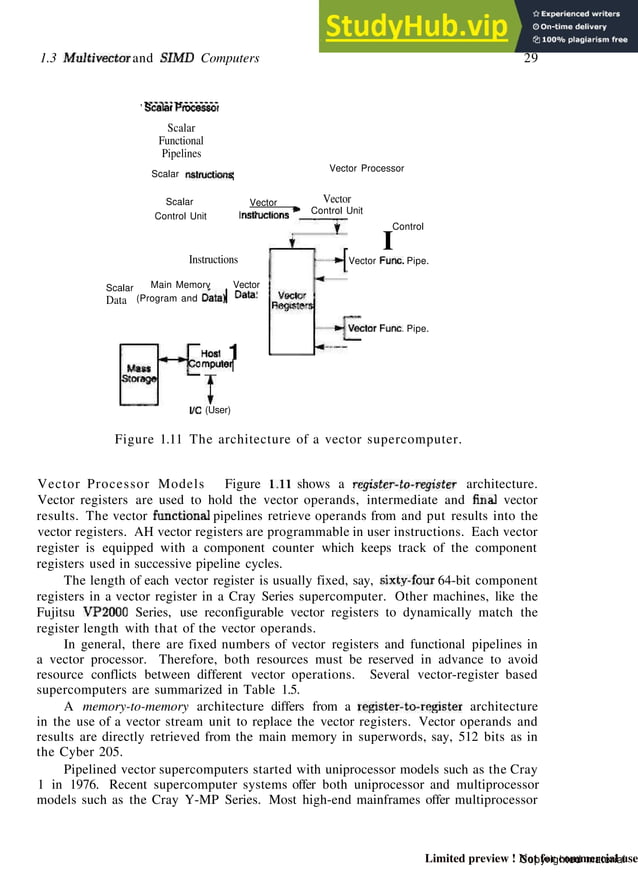

Vectorization and SIMD Processing

Vectorization is a technique that leverages Single Instruction, Multiple Data (SIMD) processing to achieve parallelism. In SIMD processing, a single instruction is applied to multiple data elements simultaneously. This approach is particularly effective for operations that can be expressed in terms of vector or matrix computations, such as image processing, signal filtering, and linear algebra.

Modern processors often include SIMD instruction set extensions, such as Intel's Advanced Vector Extensions (AVX) and ARM's Neon technology. These instructions perform an arithmetic or logical operation on multiple data elements with a single instruction, rather than one element at a time. By vectorizing code to take advantage of SIMD capabilities, either by hand or by letting the compiler auto-vectorize loops, developers can achieve significant performance gains for data-parallel computations.
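A vectorizing compiler typically restructures a loop so that its main body processes one full register's worth of elements per iteration, followed by a scalar loop for any leftover elements. The Python sketch below models that structure for `out[i] = a*x[i] + y[i]` (the BLAS-style SAXPY operation). The lane count of 8 is an assumption, matching eight 32-bit floats in a 256-bit AVX register, and the name `saxpy_strip_mined` is hypothetical. Pure Python still processes the lanes one by one, so this shows the loop shape, not real SIMD execution.

```python
LANES = 8  # assumption: 8 float32 lanes, as in one 256-bit AVX register


def saxpy_strip_mined(a, x, y, lanes=LANES):
    """Compute a*x[i] + y[i] using the loop shape a vectorizer produces."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    out = [0.0] * len(x)
    main = len(x) - len(x) % lanes  # largest multiple of `lanes`
    # Main loop: each iteration stands for ONE SIMD instruction that applies
    # the same multiply-add to `lanes` elements held in a vector register.
    for i in range(0, main, lanes):
        out[i:i + lanes] = [a * xi + yi
                            for xi, yi in zip(x[i:i + lanes], y[i:i + lanes])]
    # Scalar tail: elements that do not fill a whole register.
    for i in range(main, len(x)):
        out[i] = a * x[i] + y[i]
    return out


# Ten elements: 8 handled by the vector loop, 2 by the scalar tail.
print(saxpy_strip_mined(2.0, [1.0] * 10, [0.5] * 10))
```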

GPU Computing and General-Purpose GPU (GPGPU)

Graphics Processing Units (GPUs) have evolved from specialized hardware for graphics rendering into powerful general-purpose computing devices. GPU computing, also known as general-purpose computing on GPUs (GPGPU), harnesses the massive parallelism of GPUs to accelerate a wide range of computational tasks.

GPUs excel at highly parallel computations in which the same operation is applied to many data elements, which makes them well suited to tasks involving large datasets. By offloading computationally intensive work to the GPU, developers can achieve substantial performance improvements, although the cost of moving data between main memory and GPU memory can outweigh the gains for small workloads. GPU computing is particularly beneficial in scientific simulation, machine learning, and high-performance computing.
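GPU programming models such as CUDA launch a kernel over a grid of thread blocks, and each thread usually computes its element index as `blockIdx.x * blockDim.x + threadIdx.x`. The Python sketch below simulates that indexing scheme sequentially for a vector addition; nothing runs on a GPU, and the function name `launch_vector_add` and the default block size of 256 are assumptions for illustration.

```python
def launch_vector_add(x, y, block_dim=256):
    """Sequential model of a GPU kernel launch computing out[i] = x[i] + y[i]."""
    n = len(x)
    out = [0.0] * n
    # Launch enough blocks to cover all n elements (ceiling division).
    grid_dim = (n + block_dim - 1) // block_dim

    def kernel(block_idx, thread_idx):
        # Global index of this thread, as a CUDA kernel would compute it.
        i = block_idx * block_dim + thread_idx
        if i < n:  # the last block may contain threads past the end of the data
            out[i] = x[i] + y[i]

    # On a GPU these (block, thread) pairs would run concurrently.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx)
    return out


result = launch_vector_add(list(range(1000)), list(range(1000)))
print(result[:3], result[-1])  # [0, 2, 4] 1998
```

With 1,000 elements and 256 threads per block, four blocks (1,024 threads) are launched, and the bounds check keeps the 24 surplus threads from writing past the end of the array.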

Performance Analysis and Optimizing Parallel Applications

The successful implementation of computer parallelism requires careful performance analysis and optimization. Understanding the characteristics of parallel algorithms and the underlying hardware architecture is crucial for achieving optimal performance.

Performance analysis involves measuring and evaluating various metrics, such as execution time, resource utilization, and scalability. By profiling parallel applications, developers can identify bottlenecks, optimize data structures, and tune algorithms to maximize performance. Tools like Intel's VTune Profiler and NVIDIA's Nsight Systems provide powerful capabilities for analyzing and optimizing parallel applications.
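Two common metrics in performance analysis are speedup, S = T1 / Tp (serial time divided by parallel time), and parallel efficiency, E = S / p for p workers. The Python sketch below computes both; the workload is a hypothetical stand-in that sleeps to imitate I/O-bound tasks, since sleeping threads do not contend for CPython's interpreter lock. Measured values depend on the machine, so treat the printed numbers as illustrative.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def speedup_and_efficiency(t_serial, t_parallel, workers):
    """Speedup S = T1 / Tp and parallel efficiency E = S / p."""
    speedup = t_serial / t_parallel
    return speedup, speedup / workers


def timed(fn, *args):
    # Wall-clock time of one call, using a high-resolution monotonic clock.
    start = time.perf_counter()
    fn(*args)
    return time.perf_counter() - start


def io_task(_):
    time.sleep(0.05)  # hypothetical I/O-bound work; sleeping releases the GIL


def run_serial(n):
    for i in range(n):
        io_task(i)


def run_parallel(n, workers):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(io_task, range(n)))


workers = 4
t1 = timed(run_serial, 8)
tp = timed(run_parallel, 8, workers)
s, e = speedup_and_efficiency(t1, tp, workers)
print(f"speedup {s:.2f}x, efficiency {e:.0%}")
```

An efficiency well below 100% is usually the first sign of the bottlenecks a profiler can then help locate, such as serial sections, synchronization, or uneven load.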

Furthermore, optimizing parallel applications often involves balancing the workload among processing units. Because a parallel computation finishes only when its most heavily loaded processor finishes, load balancing techniques aim to distribute work evenly so that no processor sits idle while another becomes a bottleneck. Adaptive load balancing algorithms, which adjust the distribution of work based on runtime conditions, can further improve performance and scalability.
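The effect of load balancing can be seen in a small deterministic simulation. The Python sketch below compares static block partitioning, where each worker receives a fixed contiguous chunk of tasks, with dynamic scheduling from a shared work queue, where the next task goes to whichever worker becomes free first (similar in spirit to OpenMP's `schedule(dynamic)` clause). The task costs are hypothetical, in arbitrary time units; the value returned is the makespan, the time at which the last worker finishes.

```python
import heapq


def static_makespan(costs, workers):
    """Static block partitioning: worker k gets one contiguous chunk, fixed in advance."""
    n = len(costs)
    bounds = [n * k // workers for k in range(workers + 1)]
    return max(sum(costs[bounds[k]:bounds[k + 1]]) for k in range(workers))


def dynamic_makespan(costs, workers):
    """Work-queue scheduling: the earliest-free worker takes the next task."""
    free_at = [0] * workers  # min-heap of times at which each worker is free
    for c in costs:
        start = heapq.heappop(free_at)      # worker that becomes free first
        heapq.heappush(free_at, start + c)  # it is busy until start + c
    return max(free_at)


costs = [8, 8, 1, 1, 1, 1, 1, 1]  # two expensive tasks at the front
print(static_makespan(costs, 4), dynamic_makespan(costs, 4))  # 16 8
```

Static partitioning hands both expensive tasks to the same worker, while the work queue spreads them across workers and halves the makespan.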

Future Implications and Challenges

As computer parallelism continues to evolve, several key challenges and opportunities emerge. The increasing complexity of parallel algorithms and the growing demand for high-performance computing pose significant research and development challenges.

One of the primary challenges lies in scaling parallelism to ever-larger numbers of processors. As the number of processing units grows, communication, synchronization, and any portion of the program that must still run sequentially increasingly limit the achievable speedup. Developing efficient parallel algorithms and runtime systems that can harness massive parallelism is therefore a critical area of research.
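One standard way to quantify this limit, not stated in the original text, is Amdahl's law. If a fraction f of a program's run time can be parallelized and the rest is serial, the ideal speedup on p processors for a fixed problem size (ignoring communication overhead) is:

```latex
S(p) = \frac{1}{(1 - f) + \dfrac{f}{p}},
\qquad
\lim_{p \to \infty} S(p) = \frac{1}{1 - f}
```

For example, with f = 0.95 and p = 1024, S ≈ 1 / (0.05 + 0.00093) ≈ 19.6, and no number of processors can push the speedup past 20.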

Additionally, energy efficiency and power consumption considerations play a crucial role in the future of computer parallelism. As parallel systems become more powerful, they also consume more energy. Developing energy-efficient parallel algorithms and optimizing hardware architectures to minimize power consumption are essential for sustainable computing.

Looking ahead, the future of computer parallelism holds immense promise. With the continuous advancement of hardware technologies and the growing adoption of parallel programming paradigms, we can expect significant breakthroughs in computational efficiency and performance. As researchers and developers continue to push the boundaries of parallelism, we will witness the emergence of new parallel algorithms, programming models, and hardware architectures that revolutionize the way we compute.

Conclusion

Computer parallelism has revolutionized the world of computing, offering unparalleled opportunities for accelerating computational tasks and tackling complex problems. By harnessing the power of parallelism, we can unlock new levels of performance and efficiency. From multithreading and parallel programming models to vectorization and GPU computing, the techniques and technologies available today empower developers to create highly parallel applications.

As we navigate the evolving landscape of computer parallelism, it is crucial to stay abreast of the latest advancements and best practices. By understanding the principles, techniques, and challenges associated with parallelism, we can develop robust and efficient parallel applications that drive innovation and advance the state of the art in computing.

What are the key benefits of computer parallelism?

Computer parallelism offers several advantages, including higher computational efficiency, better resource utilization, and the ability to tackle complex problems more effectively. By executing multiple tasks simultaneously, parallelism accelerates computations and makes it practical to process large datasets.

How does computer parallelism differ from sequential execution?

Sequential execution processes tasks one after another, while computer parallelism executes multiple tasks simultaneously. This difference allows parallelism to significantly reduce the overall execution time when the work can be divided into independent parts, making it a powerful approach for optimizing performance.

What are some real-world applications of computer parallelism?

Computer parallelism finds applications in a wide range of fields, including scientific simulations, machine learning, high-performance computing, image and signal processing, and more. It enables faster computations, improved data processing capabilities, and enhanced problem-solving abilities in these domains.