Parallel Programming

Welcome to this exploration of parallel programming, a field that has changed the way we approach computational tasks. Now that additional processing power comes mainly from adding cores and machines rather than from faster single cores, parallel programming has become a key technique for improving efficiency and performance. This article covers its core concepts, main applications, performance metrics, common programming models, and future prospects.

Unraveling the Power of Parallel Programming

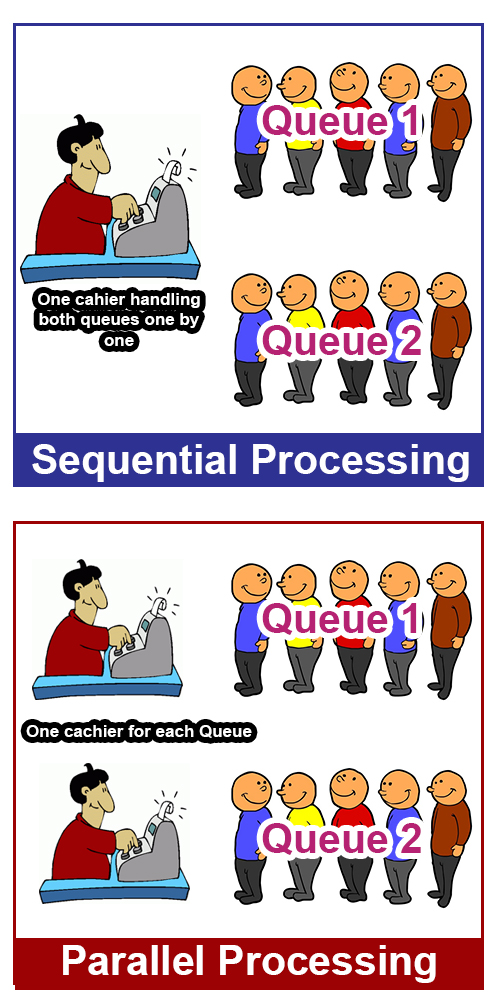

Parallel programming is a computational paradigm that allows multiple processes or threads to execute simultaneously, harnessing the power of modern multi-core processors and distributed systems. It enables the division of complex tasks into smaller, more manageable chunks that can be executed concurrently, thereby reducing the overall execution time and improving resource utilization.

The concept of parallel programming has its roots in the quest for higher computational efficiency. As gains in single-core performance slowed, the need for a new approach became evident. Parallel programming exploits the parallelism inherent in many computational problems: independent pieces of work run at the same time, which increases throughput and reduces latency.

Key Principles of Parallel Programming

At its core, parallel programming revolves around several fundamental principles:

- Parallelism: The ability to execute multiple tasks simultaneously, utilizing multiple processors or cores.

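- Concurrency: Managing multiple tasks whose lifetimes overlap in time, whether or not they actually run at the same instant. Concurrent tasks can be interleaved on a single core; parallelism requires multiple cores or processors.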

- Synchronization: Coordinating the execution of parallel tasks to maintain data consistency and avoid race conditions.

- Load Balancing: Distributing tasks evenly across available resources to maximize efficiency and minimize idle time.

These principles form the backbone of parallel programming, guiding developers in designing efficient parallel algorithms and applications.
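The synchronization principle can be illustrated with a short sketch. The example below is written in Python as an assumption of this illustration (the article itself does not prescribe a language), and the names `increment_many` and `counter` are hypothetical. Four threads update one shared counter, and a lock ensures each read-modify-write step completes before another thread starts its own.

```python
import threading

def increment_many(counter, lock, times):
    """Add 1 to the shared counter `times` times, holding the lock for each update."""
    for _ in range(times):
        with lock:  # only one thread at a time may run the read-modify-write
            counter["value"] += 1

counter = {"value": 0}  # shared state, visible to every thread
lock = threading.Lock()
threads = [
    threading.Thread(target=increment_many, args=(counter, lock, 10_000))
    for _ in range(4)
]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for all workers before reading the result

print(counter["value"])  # 40000: 4 threads x 10,000 increments, none lost
```

Without the lock, two threads could read the same old value and both write back the same new one, silently losing an update. That is the kind of race condition synchronization is meant to prevent.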

Applications of Parallel Programming

The applications of parallel programming are vast and diverse, spanning across various industries and domains. Here are some notable areas where parallel programming plays a crucial role:

- High-Performance Computing (HPC): Parallel programming is the backbone of HPC systems, enabling complex simulations, scientific computations, and data analysis tasks to be executed efficiently.

- Big Data Processing: With the explosion of data in today’s digital landscape, parallel programming is essential for distributed data processing, enabling efficient analysis of massive datasets.

- Machine Learning and AI: Parallel programming techniques are integral to training complex neural networks and running machine learning algorithms, which often require massive computational resources.

- Real-Time Systems: Parallel programming is used in real-time applications, such as gaming, robotics, and autonomous systems, to ensure timely response and efficient resource management.

- Distributed Systems: In distributed computing environments, parallel programming enables efficient utilization of resources across multiple interconnected systems.

Performance Analysis

The performance of parallel programs is a critical aspect to consider. Various factors influence the efficiency of parallel execution, including the problem’s inherent parallelism, the chosen parallel programming model, and the hardware architecture. Understanding these factors is essential for optimizing parallel programs.

One common metric used to evaluate the performance of parallel programs is speedup: the execution time of the sequential implementation divided by the execution time of the parallel one. Amdahl's Law gives the theoretical upper bound on speedup. It takes into account the serial fraction of the program, i.e., the portion that cannot be parallelized: with serial fraction s and N processors, speedup is at most 1 / (s + (1 - s) / N), so it can never exceed 1 / s no matter how many processors are added.
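Amdahl's Law is easy to evaluate numerically. The following is a minimal sketch, assuming the serial fraction of the program is already known; the function name `amdahl_speedup` and its parameter names are illustrative, not part of any library.

```python
def amdahl_speedup(serial_fraction, workers):
    """Upper bound on speedup predicted by Amdahl's Law.

    serial_fraction: share of the runtime that cannot be parallelized (0.0 to 1.0)
    workers: number of processors or cores
    """
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    parallel_fraction = 1.0 - serial_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)

# Assumed example: 10% of the program is serial.
for n in (2, 4, 8, 1024):
    print(n, round(amdahl_speedup(0.1, n), 2))
# 2 1.82
# 4 3.08
# 8 4.71
# 1024 9.91
```

Even with 1024 workers the bound stays below 10x, because the 10% serial portion alone takes one tenth of the original runtime.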

Another crucial aspect of performance analysis is scalability. A parallel program is considered scalable if it can maintain or improve its performance as the number of processors or cores increases. Scalability is often assessed by examining the program's speedup curve, which should ideally exhibit linear or near-linear behavior.

| Number of Cores | Execution Time (Sequential) | Execution Time (Parallel) | Speedup |
|---|---|---|---|
| 1 | 1000 ms | N/A | N/A |
| 2 | 1000 ms | 500 ms | 2x |
| 4 | 1000 ms | 250 ms | 4x |
| 8 | 1000 ms | 125 ms | 8x |

The table above shows an idealized example in which speedup grows in exact proportion to the number of cores (linear speedup). Real programs usually fall short of this ideal, because the serial portion of the work, synchronization, and communication overhead all limit how much each additional core helps.
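The speedup column can be reproduced directly from the timings. The sketch below also computes parallel efficiency (speedup divided by core count), a related metric the table does not list; the function names are illustrative.

```python
def speedup(sequential_ms, parallel_ms):
    """Speedup = sequential execution time / parallel execution time."""
    return sequential_ms / parallel_ms

def efficiency(sequential_ms, parallel_ms, cores):
    """Fraction of the ideal (linear) speedup actually achieved."""
    return speedup(sequential_ms, parallel_ms) / cores

# cores -> parallel execution time in ms, taken from the table
measurements = {2: 500, 4: 250, 8: 125}
for cores, parallel_ms in measurements.items():
    s = speedup(1000, parallel_ms)
    e = efficiency(1000, parallel_ms, cores)
    print(f"{cores} cores: speedup {s:.1f}x, efficiency {e:.0%}")
```

For the table's values efficiency is 100% in every row. An efficiency that drops as cores are added is a common sign that a program is not scaling well.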

Parallel Programming Models and Techniques

Parallel programming offers a myriad of models and techniques, each suited to different types of problems and hardware architectures. Here, we explore some of the most prominent parallel programming paradigms and their unique characteristics.

Multithreading

Multithreading is a common parallel programming technique that involves the execution of multiple threads concurrently within a single process. Each thread shares the same memory space, allowing for efficient communication and data sharing. Multithreading is particularly useful for tasks that involve I/O operations or require multiple concurrent computations.

Popular multithreading APIs include Java's java.lang.Thread and C++'s std::thread, which provide developers with the necessary tools to create and manage threads.
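As a rough illustration of multithreading for I/O-bound work, here is a sketch using Python's standard `concurrent.futures` thread pool (Python is this example's choice, not one of the APIs named above). The `fetch` function is hypothetical; its `time.sleep` call stands in for a network or disk wait.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fetch(resource_id):
    """Hypothetical I/O-bound task; the sleep stands in for a network or disk wait."""
    time.sleep(0.1)
    return f"resource-{resource_id}"

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=4) as pool:
    # map() runs fetch() on the pool's threads and yields results in input order
    results = list(pool.map(fetch, range(8)))
elapsed = time.perf_counter() - start

print(results)
print(f"elapsed: {elapsed:.2f}s")
```

Run one after another, the eight calls would wait about 0.8 seconds in total. With four threads the waits overlap, so the elapsed time should be noticeably shorter, though the exact figure depends on the machine.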

Message Passing Interface (MPI)

MPI is a widely used standard for message-passing parallel programming, particularly in the realm of high-performance computing. It enables the development of distributed parallel applications in which processes do not share memory but communicate and coordinate their tasks by sending and receiving messages. MPI defines both point-to-point operations (send and receive) and collective operations (such as broadcast, scatter, gather, and reduce), allowing processes to exchange data efficiently.

MPI is often the go-to choice for large-scale parallel computations, such as those performed on supercomputers or clusters of machines. Its flexibility and extensive feature set make it a powerful tool for complex parallel programming tasks.
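The message-passing style can be sketched without MPI itself. The example below is not the MPI API: it is a stand-in that uses Python threads and `queue.Queue` objects inside a single process, whereas real MPI programs run as separate processes, often on different machines. The `worker` function and the "scatter" and "gather" labels are illustrative assumptions. The workers never touch shared data directly; all data arrives and leaves as messages.

```python
import queue
import threading

def worker(rank, inbox, outbox):
    """Receive a chunk of numbers, sum it, and send the partial result back."""
    chunk = inbox.get()             # blocking receive
    outbox.put((rank, sum(chunk)))  # send (rank, partial sum) to the coordinator

data = list(range(100))
num_workers = 4
inboxes = [queue.Queue() for _ in range(num_workers)]
results = queue.Queue()

threads = [
    threading.Thread(target=worker, args=(rank, inboxes[rank], results))
    for rank in range(num_workers)
]
for t in threads:
    t.start()

# "Scatter": send each worker its own slice of the data.
for rank in range(num_workers):
    inboxes[rank].put(data[rank::num_workers])

# "Gather": collect one partial result per worker, in whatever order they arrive.
partials = dict(results.get() for _ in range(num_workers))
for t in threads:
    t.join()

total = sum(partials.values())
print(total)  # 4950, the same as sum(range(100))
```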

OpenMP

OpenMP is a popular parallel programming API for shared memory systems, providing a set of directives and library routines that can be used to express parallelism in C, C++, and Fortran programs. It allows developers to easily parallelize loops and sections of code, taking advantage of multi-core processors without the need for explicit thread management.

OpenMP is particularly well-suited for parallelizing computationally intensive tasks, such as matrix operations or numerical simulations, making it a popular choice in scientific computing and engineering applications.
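The work-sharing pattern behind a parallel loop with a reduction can be sketched as follows. This is an analogue written in Python, not OpenMP: the loop's iteration space is split into chunks, each chunk is handed to a pool worker, and the partial results are combined at the end. The function names and the default of four workers are assumptions made for the illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def sum_of_squares(start, stop):
    """Loop body for one contiguous chunk of the iteration space."""
    return sum(i * i for i in range(start, stop))

def parallel_sum_of_squares(n, workers=4):
    """Split range(n) into chunks, process them in a pool, combine the partial sums."""
    chunk = max(1, (n + workers - 1) // workers)  # ceiling division, at least 1
    bounds = [(lo, min(lo + chunk, n)) for lo in range(0, n, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(lambda b: sum_of_squares(*b), bounds)
        return sum(partials)  # the "reduction" step

print(parallel_sum_of_squares(1000))  # 332833500
```

One caveat: in standard CPython builds the global interpreter lock prevents threads from executing Python bytecode simultaneously, so this sketch shows the structure of the pattern rather than a real speedup. CPU-bound Python code generally needs a process pool or native code for that.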

GPU Programming

With the rise of Graphics Processing Units (GPUs) as powerful computational accelerators, GPU programming has become a significant parallel programming paradigm. GPUs offer massive parallel processing capabilities, allowing thousands of threads to execute simultaneously. Platforms such as CUDA and OpenCL provide the programming models and APIs for developing parallel applications that run on GPUs.

GPU programming is widely used in machine learning, computer vision, and scientific simulations, where the massive parallelism of GPUs can significantly accelerate computations.

Functional Programming and MapReduce

Functional programming, with its emphasis on pure functions and immutability, lends itself well to parallel programming. Languages like Haskell and Erlang provide powerful tools for concurrent and parallel programming. Additionally, the MapReduce framework, popularized by Google, offers a parallel programming model for distributed computing, allowing large datasets to be processed in parallel across a cluster of machines.
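The MapReduce idea can be shown in miniature with a word count. The sketch below runs on one machine with a thread pool and is not any particular MapReduce framework; the phase function names and sample documents are illustrative. Because `map_phase` and `reduce_phase` are pure functions with no shared mutable state, their calls can be distributed across workers without extra synchronization.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

def map_phase(document):
    """Emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle(mapped):
    """Group all emitted values by key so each key can be reduced independently."""
    groups = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups

def reduce_phase(item):
    """Combine all values emitted for one key."""
    key, values = item
    return key, sum(values)

documents = ["the quick brown fox", "the lazy dog", "the fox"]
with ThreadPoolExecutor() as pool:
    mapped = list(pool.map(map_phase, documents))  # map: one task per document
    counts = dict(pool.map(reduce_phase, shuffle(mapped).items()))  # reduce: one task per word

print(counts["the"], counts["fox"])  # 3 2
```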

Challenges and Future Directions

While parallel programming has undoubtedly revolutionized computational efficiency, it comes with its own set of challenges. Managing concurrency, ensuring data consistency, and optimizing parallel algorithms are complex tasks that require careful consideration.

Looking ahead, the future of parallel programming holds exciting possibilities. With the continued development of multi-core processors and distributed systems, parallel programming will become even more crucial. The rise of quantum computing also presents new opportunities and challenges, as quantum algorithms may require fundamentally different parallel programming approaches.

Furthermore, the increasing demand for real-time applications and the Internet of Things (IoT) will drive the need for efficient parallel programming techniques to manage the vast amounts of data and computations involved. Edge computing and distributed cloud architectures will also play a significant role in shaping the future of parallel programming.

Key Takeaways

- Parallel programming is a powerful technique for maximizing computational efficiency by executing multiple tasks simultaneously.

- It is widely used in high-performance computing, big data processing, machine learning, and real-time systems.

- Key principles include parallelism, concurrency, synchronization, and load balancing.

- Performance analysis is crucial, with speedup and scalability being key metrics.

- Various parallel programming models, such as multithreading, MPI, OpenMP, GPU programming, and functional programming, offer different approaches to parallel computation.

- Challenges include managing concurrency and optimizing parallel algorithms, while future prospects lie in quantum computing, real-time applications, and distributed computing.

Conclusion

Parallel programming is a dynamic and rapidly evolving field, offering immense potential for improving computational efficiency and solving complex problems. By understanding the principles, applications, and techniques of parallel programming, developers can unlock the full power of modern multi-core processors and distributed systems. As technology continues to advance, parallel programming will remain a crucial tool in the digital landscape, shaping the future of computing and enabling innovative solutions.

What is the main advantage of parallel programming over sequential programming?

Parallel programming allows multiple tasks to be executed simultaneously, leading to significant improvements in performance and resource utilization. This is particularly beneficial for computationally intensive tasks, enabling faster execution times and better scalability.

How do I choose the right parallel programming model for my application?

The choice of parallel programming model depends on various factors, including the nature of the problem, the available hardware architecture, and the programming language used. It’s essential to consider factors like the degree of parallelism, data sharing requirements, and the need for explicit thread management when selecting a model.

What are some common challenges in parallel programming, and how can they be addressed?

Common challenges in parallel programming include managing concurrency, ensuring data consistency, and optimizing parallel algorithms. Techniques such as careful synchronization, load balancing, and using appropriate data structures can help mitigate these challenges. Additionally, thorough testing and debugging are crucial for identifying and resolving parallel programming issues.